Datacenter Computing

Here you'll find the laboratories for the Datacenter Computing Course. The first labs will guide you through some of the basic workloads in the datacenter, whereas the second part tackles tasks related to networking in the datacenter.

The Basics

The first lab will focus on the basics:

- how to use SLURM (most common workload manager for clusters such as supercomputers),

- PyTorch 101

- Solving the MNIST challenge

Slurm

1. fep login

Log in to the FEP cluster over ssh:

$ ssh <username>@fep.grid.pub.ro

Replace <username> with the ID you use to log in to Moodle (something like mihai.dumitru2201).

Most likely, the login process will hang and you will see a message like:

Please login at https://login.upb.ro/auth/realms/UPB/device. Then input code YXAH-KGRL

Follow the instructions. This can get quite annoying, so you should enable public key authentication. Follow the instructions here to see how to generate a key pair and how to add the public key to the remote server.

You can add the following to your ~/.ssh/config file to make the process

easier (from then on, login using ssh fep):

Host fep

Hostname fep.grid.pub.ro

User <username>

ServerAliveInterval 10

IdentityFile ~/.ssh/<private_key_used_on_fep>

IdentitiesOnly yes

2. Submitting a job

Create and run the following info.sh script, to get some information about the

machine you are running on:

#!/bin/bash

# Print the hostname

printf "Hostname: %s\n\n" $(hostname)

# Print the number of installed processors

printf "Number of processors: %s\n\n" $(nproc --all)

# Print the amount of memory available

printf "Total memory: %s\n\n" $(free -h | grep Mem | awk '{print $2}')

# Print the amount of disk space available

printf "Total disk space (for /): %s\n\n" $(df -h / | grep / | awk '{print $2}')

# Print information about the GPUs

if command -v nvidia-smi &> /dev/null

then

printf "GPUs available:\n"

nvidia-smi --query-gpu=gpu_name --format=csv,noheader

else

printf "No GPUs available\n"

fi

You are seeing information about the front-end processor itself, which is not designed for workloads, but rather serves as a gateway to the cluster.

Now let's run this on a compute node; run:

srun --partition=ucsx --gres gpu:2 info.sh

You should now see the details of the compute node you are running on.

How much GPU memory is available in total?

3. Getting more information about the cluster

To see a list of all the partitions available on the cluster, run:

$ sinfo -o "%10P %30N %10c %10m %20G "

Alternatively, you can inquire solely about a specific partition:

$ sinfo -p <partition_name> -o "%30N %10c %10m %20G "

Read more about the sinfo command

here (or by running man sinfo!).

Find out how many CPUs are idle, in total, over all nodes in all partitions.

4. Running jobs

You can simply launch an interactive shell on a compute node by running:

srun --partition=ucsx --gres gpu:2 --pty bash

Alternatively, you can replace --pty bash with the path to a script you want to

run.

However, srun is a blocking command, so you will have to wait for the job to

be accepted, then completed, before you regain control.

Because this is a shared environment, it might happen that the resources you

desire are not available at the moment.

So you might want to use the sbatch command to submit a job to the queue; when

resources become available, the job is automatically taken from the queue and

executed:

sbatch --partition=ucsx --gres gpu:2 --time=1:00:00 --wrap="bash info.sh"

Read more about srun here and about

sbatch here.

What does --gres gpu:2 mean?

Each job is identified by a specific "job ID", which is printed to stdout after submission. At any point, you can check the status of your job by running:

squeue -j <job-id> -o "%10i %10P %15j %15u %10T %M"

This shows its ID, the partition it is running on, the job name, the user who submitted it, the state of the job, and the amount of time it has been running for (if it is running).

Read more about the squeue command

here or in man squeue.

In particular, running it without the -j <job-id> argument will show you all

the jobs currently pending or running on the cluster.

For each job submitted, a file is created in the directory where the job

was submitted, with the name slurm-<job-id>.out; here, you will find the output

of the job.
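The link between a job ID and its output file is easy to script. The sketch below, in plain Python, extracts the ID from the line that sbatch prints on submission (Submitted batch job <job-id>) and derives the default output file name. The helper names and the sample ID are made up for illustration.

```python
import re

def parse_job_id(sbatch_stdout: str) -> int:
    """Extract the job ID from sbatch's 'Submitted batch job <id>' line."""
    match = re.search(r"Submitted batch job (\d+)", sbatch_stdout)
    if match is None:
        raise ValueError("no job ID found in sbatch output")
    return int(match.group(1))

def default_output_file(job_id: int) -> str:
    """Name of the file where Slurm writes the job's output by default."""
    return f"slurm-{job_id}.out"

# Hypothetical example: the ID 123456 is invented.
job_id = parse_job_id("Submitted batch job 123456\n")
print(job_id, default_output_file(job_id))
```

The same ID is what you pass to squeue -j when checking on the job.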

Submit a job to the queue that takes more than 30 seconds to run, then prints a "hello" message; cancel it before finishing.

5. Running a chat LLM

We will now load a model on the GPU and run a chatbot using the transformers library.

Please install miniconda on fep following the tutorial here.

First, we need some dependencies; because this is a shared environment (so we can't install stuff globally) and because we'll be using cutting-edge stuff that's very volatile, we will create virtual environments, in which we can install whatever we want. If you move on to another application that needs other, conflicting versions of libraries, you can create another environment and install them there.

For managing the virtual environments, we will use conda.

Here's the basics of creating a new environment, activating it and installing packages:

# Create a new environment

$ conda create -n llmchat python=3.11

# Activate the environment

# (You should see the name of the environment in parentheses before the prompt)

$ conda activate llmchat

# Install the necessary packages for our llm chat

$ pip install torch transformers accelerate

$ conda install tmux

# Deactivate the environment (when done with it)

$ conda deactivate

Read more about conda here.

Now let's create a script, chat.py using huggingface's

transformers library, which

takes care of downloading, verifying, loading the model on the GPU etc.

Don't worry about the details for now; the script simply creates an endless

prompt-reply interactive session between you and the model.

#!/usr/bin/env python3

import time
import transformers
import torch

model_id = "meta-llama/Llama-3.2-1B-Instruct"

# Download (on first use) and load the model, placing it on the available GPUs
pipeline = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="auto",
)

if __name__ == '__main__':
    while True:
        user = input("Prompt: ")
        messages = [
            {"role": "user", "content": user},
        ]
        outputs = pipeline(
            messages,
            max_new_tokens=2048,
        )
        # The last message of the generated conversation is the model's reply
        print(outputs[0]["generated_text"][-1]["content"])

Run the script on a ucsx node and play around with it.

There are multiple ways to do this. You can either launch it directly:

$ srun --partition=ucsx --gres gpu:2 ./chat.py

Or launch an interactive shell and run it from there:

$ srun --partition=ucsx --gres gpu:2 --pty bash

$ ./chat.py

When you get to the compute node, you need to manually activate the environment

created previously.

This mode allows you to run tmux so that you can safely detach while keeping

your session and you can run multiple interactive shells at once on the same job.

See here for an intro to

tmux.

Use tmux to run watch -n0.5 nvidia-smi in one pane, then launch chat.py

from another and see how the GPU is used (note that whenever a new pane is

opened, you need to activate the environment again).

Write a non-interactive script that takes two arguments:

- a file containing a "system" message with special instructions for how the LLM should reply

- a file containing a prompt

The LLM's answer is printed to stdout. Submit this script to the queue.

IMPORTANT: The model you are using is automatically downloaded to

~/.cache/huggingface/hub/ the first time you run the script (and is taken from there, without downloading, on subsequent runs). Even though the model is quite small, it still sums up to 2.4 GB, so we ask you to clean up your ~/.cache/huggingface/hub/ directory after you complete the lab.
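To check how much space the cache takes before and after cleaning up, a small Python sketch like the one below can help. It only sums file sizes under the cache directory and deletes nothing; symbolic links are skipped so that files reachable through several links are not counted twice. The function name is made up for illustration.

```python
import os
from pathlib import Path

def dir_size_bytes(root: Path) -> int:
    """Sum the sizes of all regular files under root (symlinks are skipped)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if not path.is_symlink():
                total += path.stat().st_size
    return total

cache = Path.home() / ".cache" / "huggingface" / "hub"
if cache.is_dir():
    print(f"{cache}: {dir_size_bytes(cache) / 1e9:.2f} GB")
else:
    print(f"{cache} does not exist; nothing to clean up")
```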

Jupyter

Jupyter is a web-based interactive development environment, widely used in ML and data science. It enables us to quickly experiment with code, without having to rerun entire scripts from the top when something breaks. It also allows us to visually examine and inspect the data that we use, as well as information about the training process. Read more about it here.

We will use jupyter on the fep machines and forward the network traffic such

that we can access it from the local browser (but all the computation will be

done on the partitions on fep!).

Installing Jupyter

Activate the conda environment you want to use, and install jupyter:

$ conda install jupyter

It might take a while, as it will bring with it a lot of dependencies.

Running Jupyter

Before running jupyter, we need to determine the IP address at which the compute

node is accessible from fep.

On the compute node, run:

$ ip a

And look for the first bond interface; it might look like:

17: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 6c:fe:54:48:3b:b8 brd ff:ff:ff:ff:ff:ff

inet 172.24.12.2/16 brd 172.24.255.255 scope global bond0

valid_lft forever preferred_lft forever

inet6 fe80::6efe:54ff:fe48:3bb8/64 scope link

valid_lft forever preferred_lft forever

The value we're interested in is right after inet (172.24.12.2 in this

case).
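If you prefer to extract the address programmatically, the following Python sketch parses text in the format printed by ip a and returns the first IPv4 address of a given interface. It runs on a shortened copy of the sample output above; the function name is an assumption made for this example.

```python
import re
from typing import Optional

SAMPLE = """\
17: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 6c:fe:54:48:3b:b8 brd ff:ff:ff:ff:ff:ff
    inet 172.24.12.2/16 brd 172.24.255.255 scope global bond0
    inet6 fe80::6efe:54ff:fe48:3bb8/64 scope link
"""

def first_ipv4(ip_output: str, interface: str) -> Optional[str]:
    """Return the first IPv4 address listed for `interface`, or None."""
    current = None
    for line in ip_output.splitlines():
        # Interface headers look like "17: bond0: <FLAGS> ..."
        header = re.match(r"\d+:\s+([^:@\s]+)", line)
        if header:
            current = header.group(1)
            continue
        # Address lines look like "inet 172.24.12.2/16 ..." (inet6 is ignored)
        addr = re.match(r"\s*inet\s+(\d+\.\d+\.\d+\.\d+)/\d+", line)
        if addr and current == interface:
            return addr.group(1)
    return None

print(first_ipv4(SAMPLE, "bond0"))
```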

We need to run jupyter on a compute node with access to GPUs; srun an

interactive shell there and don't forget to activate the conda environment in

which you installed jupyter.

$ jupyter notebook --ip 0.0.0.0 --port <port>

Remember that there are other people using these machines at the same time, so we need to avoid conflicting ports. You can use the following bash function that generates a random, unused port (adapted from here):

randport() {

comm -23 <(seq 10000 65000) \

<(ss -tuan | \

awk '{print $4}' | \

cut -d':' -f2 | \

grep "[0-9]\{1,5\}" | \

sort | \

uniq) \

| shuf | head -n 1

}

So we can run:

$ jupyter notebook --ip 0.0.0.0 --port $(randport)

In the output generated, look for the lines that say something like:

[I 2024-10-22 11:04:27.600 ServerApp] Jupyter Server 2.14.1 is running at:

[I 2024-10-22 11:04:27.600 ServerApp] http://dgxh100-precis-wn02.grid.pub.ro:17872/tree?token=3a538e99683e78c740acaa560ad185fa16d59001bed8e17b

[I 2024-10-22 11:04:27.601 ServerApp] http://127.0.0.1:17872/tree?token=3a538e99683e78c740acaa560ad185fa16d59001bed8e17b

The relevant information here is the actual port and the hexadecimal token.
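As an alternative to the randport function above, you can ask the operating system directly for a free port. The Python sketch below binds a socket to port 0, which tells the kernel to pick any unused port, then reports the port that was chosen. Unlike randport it does not restrict the range to 10000-65000, and the port is only guaranteed to be free at the moment of the call.

```python
import socket

def free_port() -> int:
    """Ask the kernel for a TCP port that is unused right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind(("", 0))  # port 0 means "pick any free port"
        return sock.getsockname()[1]

print(free_port())
```

Either approach leaves a small window in which another user can grab the same port, so if jupyter reports that the port is taken, simply generate another one.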

Accessing Jupyter

Now the jupyter notebook is running remotely on a compute node and we want to access it from our local browser.

We will use ssh to securely forward traffic from a port on the compute node,

using fep as relay.

$ ssh -L 8080:<address>:<port> <fep>

Here, <address> is the IP address of the bond interface of the compute node

and <port> is the randomly generated port on which jupyter is served.

<fep> represents your login; either <username>@fep.grid.pub.ro or

the name of a ~/.ssh/config entry that you previously configured.

ssh will then leave you logged in to fep, but we can ignore this shell for

the following steps; just keep it open: once the session is closed, the port

forwarding stops.

For the examples we provided in this tutorial, the concrete command would look like:

$ ssh -L 8080:172.24.12.2:17872 mihai.dumitru2201@fep.grid.pub.ro

Now go to your local browser and access:

http://localhost:8080/tree?token=<hexadecimal token>

In the example from this tutorial, the correct URL would be:

http://localhost:8080/tree?token=3a538e99683e78c740acaa560ad185fa16d59001bed8e17b

In short, the effect of our ssh forwarding is that now the jupyter notebook is

available as if served locally on port 8080.

The token is there for authentication purposes; without it you will not be able

to access jupyter.
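The whole procedure boils down to picking three values out of the jupyter log (port, path and token) and combining them with the node address. The Python sketch below does this for the sample log line from this tutorial; the function name and its parameters are assumptions made for illustration.

```python
import re
from urllib.parse import urlsplit, parse_qs

LOG_LINE = ("[I 2024-10-22 11:04:27.600 ServerApp] "
            "http://dgxh100-precis-wn02.grid.pub.ro:17872/tree"
            "?token=3a538e99683e78c740acaa560ad185fa16d59001bed8e17b")

def tunnel_info(log_line, node_ip, fep_login, local_port=8080):
    """Return (ssh command, local URL) for a jupyter 'running at' log line."""
    url = re.search(r"https?://\S+", log_line).group(0)
    parts = urlsplit(url)
    token = parse_qs(parts.query)["token"][0]
    ssh_cmd = f"ssh -L {local_port}:{node_ip}:{parts.port} {fep_login}"
    local_url = f"http://localhost:{local_port}{parts.path}?token={token}"
    return ssh_cmd, local_url

cmd, url = tunnel_info(LOG_LINE, "172.24.12.2",
                       "mihai.dumitru2201@fep.grid.pub.ro")
print(cmd)
print(url)
```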

PyTorch 101

As we've discussed in the course, PyTorch is eagerly executed, meaning that we'll write normal Python programs. For the tutorial below, please start a new jupyter notebook and follow along in it.

Tensors and Variables

PyTorch Tensors are similar in behaviour to NumPy’s arrays.

>>> import torch
>>> a = torch.Tensor([[1,2],[3,4]])
>>> print(a)
tensor([[1., 2.],
        [3., 4.]])
>>> print(a**2)
tensor([[ 1.,  4.],
        [ 9., 16.]])

PyTorch Variables allow you to wrap a Tensor and record operations performed on it. This allows you to perform automatic differentiation. (Since PyTorch 0.4, Variable is deprecated and simply returns a Tensor; a Tensor created with requires_grad=True records operations in the same way, and the old import below still works.)

>>> from torch.autograd import Variable
>>> a = Variable(torch.Tensor([[1,2],[3,4]]), requires_grad=True)
>>> print(a)
tensor([[1., 2.],
        [3., 4.]], requires_grad=True)
>>> y = torch.sum(a**2) # 1 + 4 + 9 + 16
>>> print(y)
tensor(30., grad_fn=<SumBackward0>)
>>> y.backward() # compute gradients of y wrt a
>>> print(a.grad) # print dy/da_ij = 2*a_ij for a_11, a_12, a_21, a_22
tensor([[2., 4.],
        [6., 8.]])

Core Training Step

Let’s begin with a look at what the heart of our training algorithm looks like. The five lines below pass a batch of inputs through the model, calculate the loss, perform backpropagation and update the parameters. (We won't run this code yet; we'll create a model instance below.)

output_batch = model(train_batch) # compute model output

loss = loss_fn(output_batch, labels_batch) # calculate loss

optimizer.zero_grad() # clear previous gradients

loss.backward() # compute gradients of all variables wrt loss

optimizer.step() # perform updates using calculated gradients

Each of the variables train_batch, labels_batch, output_batch and loss is a

PyTorch Variable and allows derivatives to be automatically calculated.

All the other code that we write is built around this: the exact specification of the model, how to fetch a batch of data and labels, computation of the loss and the details of the optimizer. In this section, we’ll cover how to write a simple model in PyTorch, compute the loss and define an optimizer.
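To make the role of each of the five lines concrete, here is the same loop written without PyTorch, for a one-parameter model y = w * x with a mean squared error loss. The gradient is derived by hand instead of by autograd; the data, the learning rate and all names are made up for illustration.

```python
# Toy data generated from y = 3 * x; the model should recover w close to 3.
train_batch = [1.0, 2.0, 3.0, 4.0]
labels_batch = [3.0, 6.0, 9.0, 12.0]

w = 0.0      # the single trainable parameter
lr = 0.01    # learning rate (arbitrary choice)
losses = []

for step in range(200):
    n = len(train_batch)
    # "output_batch = model(train_batch)": forward pass
    output_batch = [w * x for x in train_batch]
    # "loss = loss_fn(output_batch, labels_batch)": mean squared error
    loss = sum((o - y) ** 2 for o, y in zip(output_batch, labels_batch)) / n
    losses.append(loss)
    # "optimizer.zero_grad()": start from a fresh gradient
    grad_w = 0.0
    # "loss.backward()": d(loss)/dw = mean(2 * (w*x - y) * x)
    grad_w += sum(2 * (o - y) * x
                  for o, y, x in zip(output_batch, labels_batch, train_batch)) / n
    # "optimizer.step()": plain gradient descent update
    w -= lr * grad_w

print(f"w = {w:.4f}, first loss = {losses[0]:.2f}, last loss = {losses[-1]:.6f}")
```

In PyTorch the hand-written derivative disappears: loss.backward() computes it for every parameter of the model.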

Models in PyTorch

A model can be defined in PyTorch by subclassing the torch.nn.Module class.

The model is defined in two steps. We first specify the parameters of the

model, and then outline how they are applied to the inputs. For operations that

do not involve trainable parameters (activation functions such as ReLU,

operations like maxpool), we generally use the torch.nn.functional module.

import torch.nn as nn
import torch.nn.functional as F

class TwoLayerNet(nn.Module):
    def __init__(self, D_in, H, D_out):
        """
        In the constructor we instantiate two nn.Linear modules and assign them as
        member variables.

        D_in: input dimension
        H: dimension of hidden layer
        D_out: output dimension
        """
        super(TwoLayerNet, self).__init__()
        self.linear1 = nn.Linear(D_in, H)
        self.linear2 = nn.Linear(H, D_out)

    def forward(self, x):
        """
        In the forward function we accept a Variable of input data and we must
        return a Variable of output data. We can use Modules defined in the
        constructor as well as arbitrary operators on Variables.
        """
        h_relu = F.relu(self.linear1(x))
        y_pred = self.linear2(h_relu)
        return y_pred

The __init__ function initialises the two linear layers of the model. PyTorch

takes care of the proper initialization of the parameters you specify. In the

forward function, we first apply the first linear layer, apply ReLU activation

and then apply the second linear layer. The module assumes that the first

dimension of x is the batch size. If the input to the network is simply a

vector of dimension 100, and the batch size is 32, then the dimension of x

would be (32, 100). Let’s see an example of how to define a model and compute a

forward pass:

#N is batch size; D_in is input dimension;

#H is the dimension of the hidden layer; D_out is output dimension.

N, D_in, H, D_out = 32, 100, 50, 10

#Create random Tensors to hold inputs and outputs, and wrap them in Variables

x = Variable(torch.randn(N, D_in)) # dim: 32 x 100

#Construct our model by instantiating the class defined above

model = TwoLayerNet(D_in, H, D_out)

#Forward pass: Compute predicted y by passing x to the model

y_pred = model(x) # dim: 32 x 10
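As a quick sanity check on the dimensions, the arithmetic below (plain Python, no PyTorch needed) tracks the shapes through the two layers and counts the trainable parameters, assuming each nn.Linear keeps its default bias term. The helper name is made up for this example.

```python
def linear_params(in_features: int, out_features: int) -> int:
    """Weights (out x in) plus one bias per output unit."""
    return in_features * out_features + out_features

N, D_in, H, D_out = 32, 100, 50, 10
total = linear_params(D_in, H) + linear_params(H, D_out)

print("x:", (N, D_in), "-> hidden:", (N, H), "-> y_pred:", (N, D_out))
print("trainable parameters:", total)  # 5050 + 510 = 5560
```

Note that the batch size N affects the shapes of the activations but not the number of parameters.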

Loss Function

PyTorch comes with many standard loss functions available for you to use in the

torch.nn module. Here’s a simple example of how to calculate Cross Entropy

Loss. Let’s say our model solves a multi-class classification problem with C

labels. Then for a batch of size N, out is a PyTorch Variable of dimension NxC

that is obtained by passing an input batch through the model. We also have a

target Variable of size N, where each element is the class for that example,

i.e. a label in [0,...,C-1]. You can define the loss function and compute the

loss as follows:

loss_fn = nn.CrossEntropyLoss()

loss = loss_fn(out, target)

PyTorch makes it very easy to extend this and write your own custom loss function. We can write our own Cross Entropy Loss function as below (note the NumPy-esque syntax):

def myCrossEntropyLoss(outputs, labels):
    batch_size = outputs.size()[0] # batch_size
    outputs = F.log_softmax(outputs, dim=1) # compute the log of softmax values
    outputs = outputs[range(batch_size), labels] # pick the values corresponding to the labels
    return -torch.sum(outputs)/batch_size # average over the batch
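To see what the loss computes numerically, here is the same formula in plain Python on nested lists, without tensors: for each example, take the log-softmax of the scores, pick the entry of the true class, negate it, and average over the batch. The sample scores and labels are invented.

```python
import math

def cross_entropy(outputs, labels):
    """Mean of -log softmax(outputs[i])[labels[i]] over the batch."""
    total = 0.0
    for logits, label in zip(outputs, labels):
        m = max(logits)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += -(logits[label] - log_sum_exp)
    return total / len(outputs)

# Hypothetical batch: N = 2 examples, C = 3 classes.
outputs = [[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]]
labels = [0, 2]
print(cross_entropy(outputs, labels))
```

A useful reference point: when all C scores are equal, the loss of that example is log(C), which is what an untrained classifier typically starts at.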

Optimizer

The torch.optim package provides an easy to use interface for common

optimization algorithms. Defining your optimizer is really as simple as:

#pick an SGD optimizer

optimizer = torch.optim.SGD(model.parameters(), lr = 0.01, momentum=0.9)

#or pick ADAM

optimizer = torch.optim.Adam(model.parameters(), lr = 0.0001)

You pass in the parameters of the model that need to be updated every iteration. You can also specify more complex methods such as per-layer or even per-parameter learning rates.

Once gradients have been computed using loss.backward(), calling

optimizer.step() updates the parameters as defined by the optimization

algorithm.
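As an illustration of what optimizer.step() does, the sketch below spells out one SGD-with-momentum update on plain Python floats. It mirrors the rule documented for torch.optim.SGD with its default dampening and without Nesterov momentum; the function name and the toy objective are assumptions made for this example.

```python
def sgd_momentum_step(params, grads, bufs, lr=0.01, momentum=0.9):
    """One in-place SGD-with-momentum update over parallel lists of floats."""
    for i, g in enumerate(grads):
        bufs[i] = momentum * bufs[i] + g   # running "velocity"
        params[i] -= lr * bufs[i]          # move against the velocity

params, bufs = [1.0, -2.0], [0.0, 0.0]
# Minimise f(p) = sum(p_i ** 2), whose gradient is 2 * p_i.
for _ in range(300):
    grads = [2 * p for p in params]
    sgd_momentum_step(params, grads, bufs)
print(params)
```

The buffers play the role of the per-parameter state that a real optimizer keeps between calls to step().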

Training vs Evaluation

Before training the model, it is imperative to call model.train(). Likewise,

you must call model.eval() before testing the model. This accounts for the

different behaviour of dropout and batch normalization during training and testing.
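Dropout is the simplest case in which the two modes differ. The plain Python sketch below imitates inverted dropout: in training mode each value is zeroed with probability p and the survivors are scaled by 1/(1-p), while in evaluation mode the input passes through unchanged. It is a stand-in written for illustration, not PyTorch's implementation.

```python
import random

def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    if not training or p == 0.0:
        return list(values)          # evaluation mode: identity
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in values]

x = [1.0] * 8
print("train:", dropout(x, training=True, rng=random.Random(0)))
print("eval: ", dropout(x, training=False))
```

Forgetting model.eval() therefore makes test-time predictions noisy, because units keep being dropped at random.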

Painless Debugging

With its clean and minimal design, PyTorch makes debugging a breeze. You can

place breakpoints using pdb.set_trace() at any line in your code. You can then

execute further computations, examine the PyTorch Tensors/Variables and

pinpoint the root cause of the error.

MNIST

To get a better understanding of the workloads of a datacenter, we are going to build a model to solve the MNIST challenge. Please use the Jupyter Notebook from here.

2. The Transformer

Today's lab is about training our first simple transformer architecture, first on a single GPU and then on multiple GPUs.

First, we'll train a model on one GPU to tell tiny stories. Please follow the Jupyter lab.

The task is to go through the notebook and then experiment with different model parameters: the learning rate, the model architecture (hidden dimension, number of layers), the batch size and the number of epochs. Try even increasing the model size to 5x the number of parameters.

At the end of the notebook, write a short report on how these changes affect the performance of the model.

2. Distributed Training

Moving on from the previous example, we will now train a model on multiple GPUs to tell tiny stories. Please follow the Jupyter lab.

The task is to go through the notebook and, at the end, adjust the different parameters. At the end of the notebook, write a short report on how these changes affect the performance of the model.

Remote direct memory access (RDMA)

Introduction

The guys from Mellanox had a dream:

what if an application can access another application's memory via the network, even without it knowing?

That's how the RDMA protocol was born.

At first, it was exclusive to Infiniband networks, provided by, you guessed it, Mellanox.

But soon people got tired of Infiniband, and wanted something cheaper and easier to use.

RoCE and iWARP were born.

RDMA Protocol Implementations

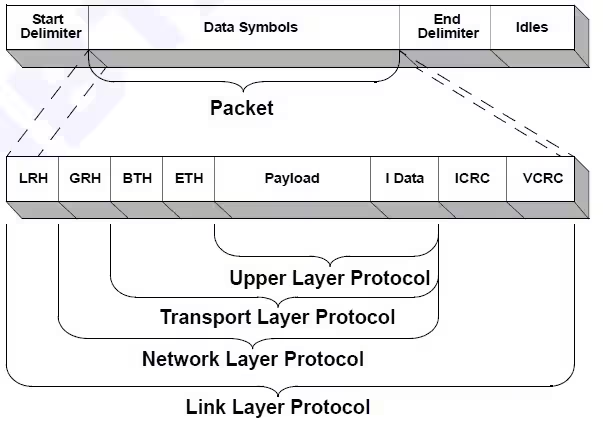

As you saw above, the RDMA protocol has many flavours, but all of them are essentially the Infiniband implementation, from which different headers are removed. The main implementations are:

- Infiniband

- RoCE

- iWARP

Infiniband

The OG, the RDMA implementation. Usable only on Infiniband networks, provided by Mellanox, now Nvidia. It looks something like this:

People nowadays use RoCE, which is, essentially, GRH, BTH, and ETH slapped onto an Ethernet header.

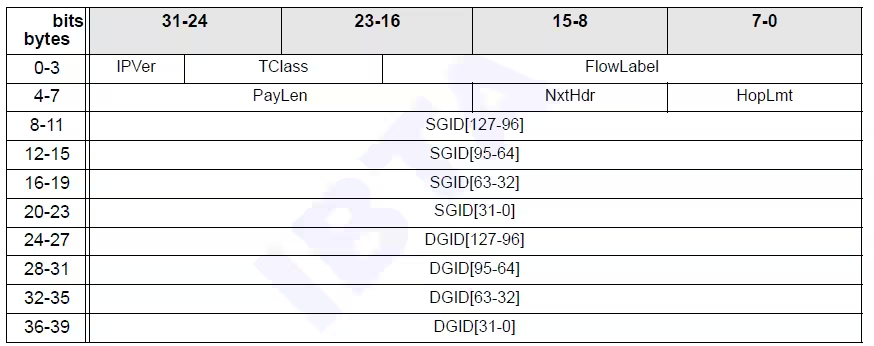

This is the GRH:

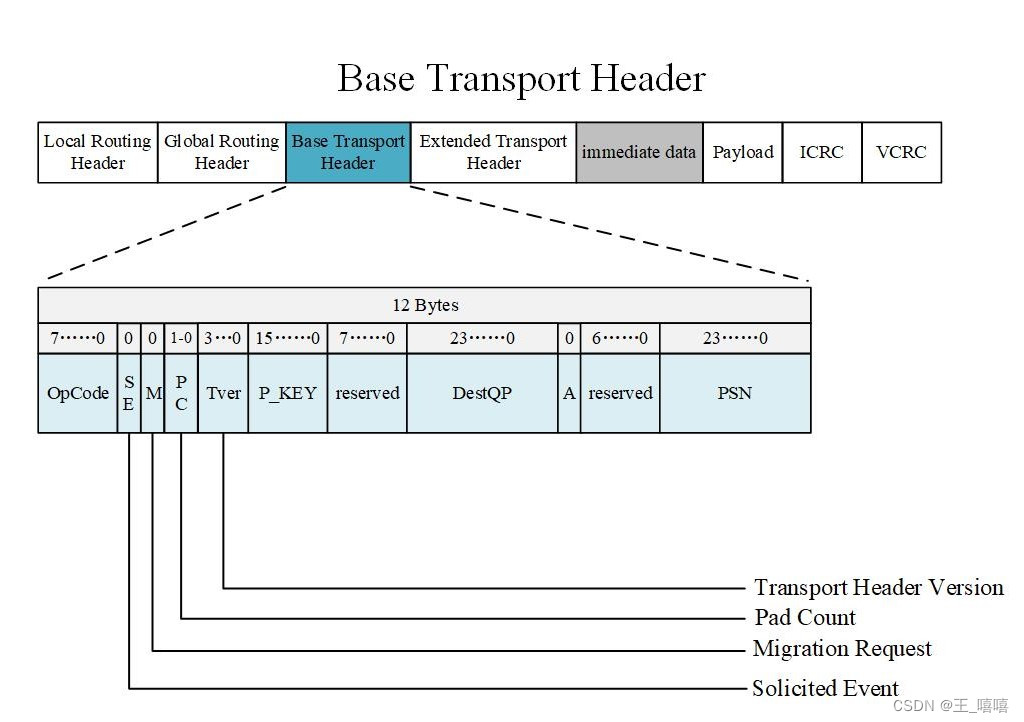

This is the BTH:

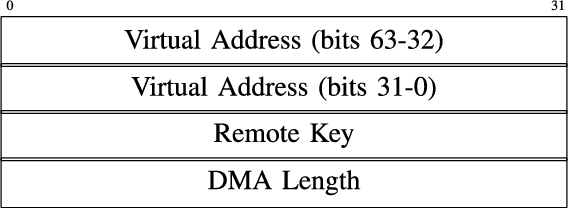

This is the ETH specific to RDMA, called RETH:

There is also AETH, the header used for ACKs.

You will be able to see it during this lab.

RoCE

How about we replace LRH (Local Routing Header) with Ethernet? We get RDMA over Ethernet.

Or, as the guys who first had this thought decided to call it, RDMA over Converged Ethernet.

Now we can do RDMA in Ethernet networks.

Hooray!

This version is called RoCEv1, and there is no reason why someone would use it today.

Think about it:

you have a MAC address and a GID.

But routers don't know about GIDs, they know about IPs, so you can only use RoCEv1 in L2 networks.

Not good.

So another protocol had to be developed.

Enter RoCEv2.

RoCEv2

How about we take it further?

Let's replace GRH with IP and UDP.

We get IPs and ports, things that the routers can actually use to route our packets in the network.

Much better.

We will be ignoring the problems that RoCE has, like the utter chaos that happens when a packet is lost, and the fact that the protocol designers originally thought that go-back-to-0 was a good idea.

What is that?

If you lose one packet, you reset everything!

Doesn't matter that some packets reached their destination.

Bless the guys from Microsoft for pushing go-back-N.

iWARP

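Now, a protocol that is not used as much, but that exists:

iWARP.

Roughly speaking, replace UDP from RoCEv2 with TCP and you have iWARP (in practice, iWARP defines its own layers on top of TCP instead of reusing the BTH).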

Is it a good idea? Yes, no more losses that create chaos. Do people use it? No.

RXE (Soft-RoCE)

Now, people start asking:

"What if I don't want to buy an expensive NIC, that implements one of the protocols from above?".

Someone thought about it, and came up with SoftRoCE, which is basically a software implementation of RDMA in the kernel.

That's what we will use today.

But first, what can RDMA do?

RDMA Operations

While TCP and UDP just carry a payload that must be interpreted by the protocols

above, RDMA specifies which operation is performed, in the BTH.

There are 3 relevant operations:

- send

- read

- write

There is also a 4th category, atomics. We don't talk about it today.

Send

The best analogy for a RDMA Send is a normal packet from TCP or UDP:

- Someone must send it, someone must receive it and interpret it.

- Nothing else, no writing to someone's memory without them knowing it.

Read

The first interesting one: the sender requests data from an address, and that data is sent asynchronously, without the receiving application knowing.

In order to do that, the sender must know a remote key, and that data must be in a special memory zone, registered beforehand as available for RDMA operations.

Now a question arises: can you read as much as you want?

The answer is yes, you can request as much as you want.

The response will be split into multiple packets, depending on the MTU of the RDMA interface.

The first packet of the returned data will be Read Response First.

The last will be Read Response Last.

Everything else will be Read Response Middle.

If the data fits in only one packet, we will have a Read Response Only.

RDMA MTU

There is a difference between the Ethernet MTU and the RDMA MTU. While the Ethernet MTU specifies the maximum length of a packet that includes headers, the RDMA MTU specifies the maximum length of the payload.
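The way a Read response is split can be written down in a few lines. The Python sketch below takes the number of bytes requested and the RDMA MTU (payload bytes per packet) and returns the sequence of response packet types described above. The function name is made up, and the sizes are arbitrary examples.

```python
def read_response_packets(length: int, mtu: int) -> list:
    """Packet types of the response to a Read of `length` bytes."""
    if length <= 0 or mtu <= 0:
        raise ValueError("length and mtu must be positive")
    count = -(-length // mtu)  # ceiling division
    if count == 1:
        return ["Read Response Only"]
    return (["Read Response First"]
            + ["Read Response Middle"] * (count - 2)
            + ["Read Response Last"])

print(read_response_packets(1000, 1024))
print(read_response_packets(4096, 1024))
```

With an RDMA MTU of 1024, a 4096-byte Read therefore comes back as one First, two Middle and one Last packet.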

Write

The second interesting one.

The sender sends data, which arrives at the receiver and is written to a registered memory address, with or without the knowledge of the receiver.

Can you write as much as you want?

Yes.

Same thing as in the case of read.

ibverbs

Theory is nice and all, but how do we do that? Using what is commonly known as

verbs. There is a library that allows an application to use RDMA, without

knowing which RDMA protocol is implemented by the NIC: ibverbs. How do the

operations from above translate to verbs?

ibverbs Send

Let's start with the easier one to understand: send.

In order for an application to send data, using the send operation, the

following need to happen:

- a RDMA device must be active and open

- a Protection Domain (PD) must be allocated

- a Queue Pair (QP) must be created; a QP consists of a Send Queue and a Receive Queue, each associated with a Completion Queue (CQ) that must be created beforehand

- a Memory Region (MR) must be registered; that region can have multiple permissions: local write (the app that registers it can write to it), remote read (a remote application can read it) and remote write; local read is always there

- a Work Request (WR) must be created and posted; a Work Request can contain multiple Scatter-Gather Entries (SGE); each SGE specifies a local memory address, a length and a local access key.

The receiver must also have the device, PD, QP and MR allocated. When a RDMA Send is received, a Work Completion (WC) structure will be added to the CQ of the receiver. The receiver must poll and empty the CQ. The WC specifies if the data was sent correctly, if there is any Immediate Data, among other things.

Immediate Data?

What is that?

Some RDMA operations can add a new header, ImmData, to the packet, that contains raw data.

Any operation that carries an ImmData header generates a WC at the receiver. The exceptions are Send, which always generates a WC (with or without ImmData), and Read, which never generates a WC at the receiver.

Read also doesn't accept ImmData.

So Immediate Data is useful mainly for letting the receiver know when a Write operation has finished.
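The rules about when the receiver gets a Work Completion fit in a small decision function. The Python sketch below only encodes the statements made in this section; it is not part of any RDMA library, and the function name is invented.

```python
def receiver_gets_wc(operation: str, has_imm_data: bool = False) -> bool:
    """Whether the receiver sees a Work Completion for the given operation."""
    op = operation.lower()
    if op == "read":
        if has_imm_data:
            raise ValueError("Read cannot carry Immediate Data")
        return False            # the receiver is never notified
    if op == "send":
        return True             # always consumes a receive and completes it
    if op == "write":
        return has_imm_data     # only Write with Immediate notifies
    raise ValueError(f"unknown operation: {operation}")

cases = [("send", False), ("send", True), ("write", False),
         ("write", True), ("read", False)]
for op, imm in cases:
    print(f"{op:5} imm={imm!s:5} -> WC at receiver: {receiver_gets_wc(op, imm)}")
```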

ibverbs Write

The sender must have everything needed to perform a Send operation, with a

twist: the WR structure must also specify the remote memory address and the

access key of that address.

In the case of the receiver, a WC will be generated only if the Write has

Immediate Data in it. If not, the receiver won't know that a Write was

performed, unless it is notified in another way.

ibverbs Read

For the sender, it is the same as the Write. Things are different for the

receiver. Unless it is notified in another way, the receiver won't know

that a Read was performed on its memory.

Tasks

1: Lab Setup

In this lab you will use 2 virtual machines that communicate with each other. A virtual machine with all the needed packages is provided here. Make sure that the virtual machines can ping each other.

For the lab to work, the virtual machines must be on a Bridged Network. If it doesn't work for you (looking at you, VMWare), try another hypervisor.

You can also do the lab on your native Linux, but you must find another person who wants to do the same thing, so you can speak RDMA to each other. Or, if you are a networking god, you can use only one VM and pair with another fellow divine.

2: Create a RXE Interface

Use the following command to create a SoftRoCE (RXE) interface, replacing <netdev> with the name of your network interface:

sudo rdma link add <netdev>rxe type rxe netdev <netdev>

3: Inspect The Interface

There are a few commands to inspect a RDMA interface.

First, you can use rdma link show and ibv_devices to see if your interface is there.

Then, use ibv_devinfo -v to show details about the RDMA devices present on your system.

You will see a lot of output.

The important part is at the end: the description of the ports.

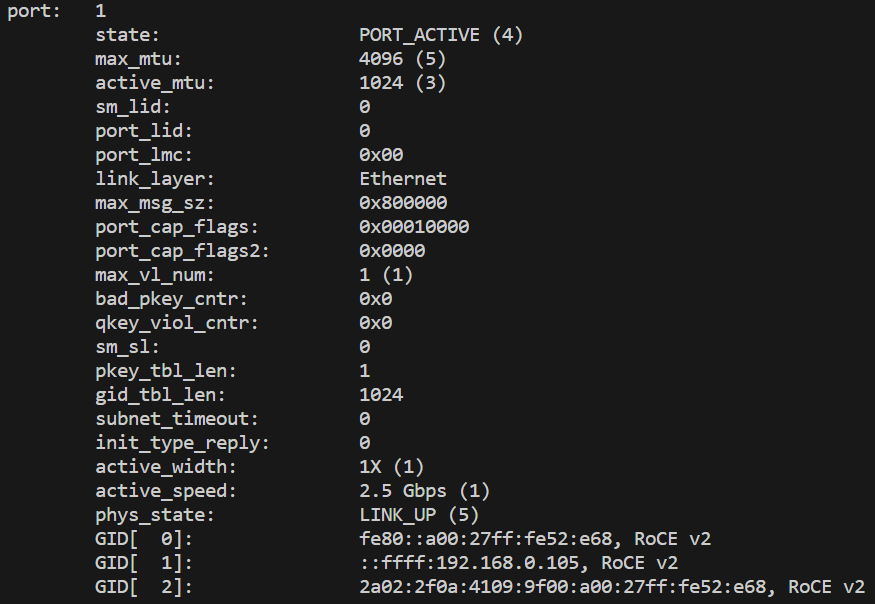

Your interface has only one port, so you should see something like this:

Some things are important here: state, active_mtu, and the GID table.

In the image you have an interface that uses both RoCEv1 and RoCEv2, so it will have 2 GID entries for each protocol.

Generally, a RoCEv2 entry will correspond to an IP address assigned to the network interface to which the RDMA device is linked.

Use ip a s to display details about the network interfaces that your system uses.

Observe the connection between GID entries and IP addresses.

For RoCEv2, the GID entry will be either the IPv6 address, or ::ffff:<IPv4 address>.

Remember the index of the GID entry for the IPv4 address;

you will need it later.
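The ::ffff:<IPv4 address> form is the standard IPv4-mapped IPv6 address, so you can compute the GID you should be looking for from your IPv4 address. Here is a small Python sketch using the standard ipaddress module; the function name is made up and the address is only an example, so use the one reported by ip a s on your VM.

```python
import ipaddress

def ipv4_to_rocev2_gid(ipv4: str) -> ipaddress.IPv6Address:
    """Build the IPv4-mapped IPv6 address ::ffff:<IPv4> for an IPv4 address."""
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address((0xFFFF << 32) | int(v4))

gid = ipv4_to_rocev2_gid("172.24.12.2")
print(gid.exploded)      # fully expanded: 8 groups of 4 hex digits
print(gid.ipv4_mapped)   # recovers the embedded IPv4 address
```

The expanded form is handy when a tool prints the GID as raw hexadecimal groups rather than in dotted notation.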

4: Do Some RDMA

Now, let's generate some RDMA traffic, using some standard tools: ib_write_bw and ibv_rc_pingpong

4.1: ibv_rc_pingpong

ibv_rc_pingpong will do a simple ping back and forth, to test the connectivity.

On one system, run:

ibv_rc_pingpong -d <rxe_interface> -g <gid_index>

Notice you need a GID index. Use the one for the IPv4 address.

On the second system, run:

ibv_rc_pingpong -d <rxe_interface> -g <gid_index> <ip_of_first_system>

4.2: ib_write_bw

ib_write_bw will measure the bandwidth of a RDMA connection, for write operations.

On one system, run:

ib_write_bw -d <rxe_interface> -x <gid_index>

On the other, run:

ib_write_bw -d <rxe_interface> -x <gid_index> <ip_of_first_system>

There are also other tools, like ib_write_lat, ib_read_bw, ib_read_lat, ib_send_bw, ib_send_lat.

The _lat tools measure the latency of one operation.

5: Dump Some RDMA Traffic

Normally, intercepting RDMA traffic is a pain.

But, because we use SoftRoCE, all the packets go through the Linux kernel, and tcpdump can see them.

Use tcpdump to dump the traffic, while you use one of the tools from above.

Use Wireshark to inspect the capture.
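RoCEv2 packets travel over UDP with destination port 4791, so that is the traffic to look for in the capture. To get a feel for what Wireshark decodes, the Python sketch below parses the 12-byte BTH found at the start of the UDP payload and names the operation from its opcode. It covers only Reliable Connection opcodes, runs on a hand-built header rather than a real capture, and all names in it are chosen for this example.

```python
import struct

# Reliable Connection opcodes, as listed in the InfiniBand specification.
RC_OPCODES = {
    0x00: "Send First", 0x01: "Send Middle", 0x02: "Send Last",
    0x03: "Send Last with Immediate", 0x04: "Send Only",
    0x05: "Send Only with Immediate",
    0x06: "RDMA Write First", 0x07: "RDMA Write Middle",
    0x08: "RDMA Write Last", 0x09: "RDMA Write Last with Immediate",
    0x0A: "RDMA Write Only", 0x0B: "RDMA Write Only with Immediate",
    0x0C: "RDMA Read Request", 0x0D: "RDMA Read Response First",
    0x0E: "RDMA Read Response Middle", 0x0F: "RDMA Read Response Last",
    0x10: "RDMA Read Response Only", 0x11: "Acknowledge",
}

ROCEV2_UDP_PORT = 4791

def parse_bth(udp_payload: bytes) -> dict:
    """Decode the Base Transport Header at the start of a RoCEv2 payload."""
    if len(udp_payload) < 12:
        raise ValueError("payload shorter than a BTH")
    opcode, _flags, pkey, qp_word, psn_word = struct.unpack(
        "!BBHII", udp_payload[:12])
    return {
        "opcode": RC_OPCODES.get(opcode, f"unknown (0x{opcode:02x})"),
        "pkey": pkey,
        "dest_qp": qp_word & 0xFFFFFF,     # low 24 bits
        "ack_req": bool(psn_word >> 31),   # top bit of the last word
        "psn": psn_word & 0xFFFFFF,        # low 24 bits
    }

# Hand-built example: RDMA Write Only to QP 0x11, PSN 7, ack requested.
sample = struct.pack("!BBHII", 0x0A, 0x40, 0xFFFF, 0x11, (1 << 31) | 7)
print(parse_bth(sample))
```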

6: RDMA Interface Statistics

Sometimes stuff doesn't work, and no one knows why.

That's why there are hardware counters available, to shed some light.

Usually, you can find them in /sys/class/infiniband/<rdma_dev>/ports/1/hw_counters/.

Some drivers also provide additional counters in /sys/class/infiniband/<rdma_dev>/ports/1/counters/, but that's not our case.

List those counters and try to find what they mean.

7: Write A RDMA Application

Ok, enough using other people's applications.

Time to get your hands dirty, and write an application that does RDMA.

To do that, you must use the ibverbs library.

The VMs already have it installed.

7.1: Setup the Connection

In order for any 2 applications to speak RDMA to each other, a few things must happen:

- each application must open a RDMA device

- each application must create one or more QPs (Queue Pairs)

- each application must register the memory it's going to use for RDMA operations

- the 2 applications must exchange at least the following things: the numbers of the used QPs, the GID, the addresses of the registered memory and the remote access keys of that memory

You have to do just that.

Follow the comments in ibverbs/main.cc.

If you get stuck anywhere, the reference implementation is in ibverbs-sol.

And google (especially rdmamojo) is your friend for this one.

Oh, and one more thing:

the RDMA drivers really hate it when you don't free the resources you use.

7.2: Do a Send

Now that all the structures are set up, you can do a RDMA Send. As before, follow the comments. If you feel adventurous, do a Send With Immediate.

7.3: Do a Write

Now do a RDMA Write. You know the drill.

HTSim

htsim is an open-source network simulator optimized for large-scale datacenter topology simulation. It was initially developed at University College London, then at University Politehnica of Bucharest and more recently by Broadcom.

The github page for htsim is here: https://github.com/Broadcom/csg-htsim

To get started with htsim, first clone the repo and run make in the sim directory. This will build the entire codebase. If you want to extend htsim, you can find information about the source code in the associated wiki.

Running experiments with htsim

The main files to run experiments are in the datacenter directory. There is a htsim_* executable for each of the transport protocols htsim currently supports. Each of these sets up a variant of a FatTree topology (with a configurable number of nodes), creates transport connections and then runs a traffic pattern that is either fixed (a permutation for htsim_tcp) or given as a parameter (htsim_ndp, htsim_eqds, htsim_roce, htsim_swift). Each of these main files accepts a range of parameters that control the queue size, initial window, and many more. Have a look at the source code for a definitive guide.

Creating a connection matrix

The connection_matrices directory contains python files to generate connection matrix files which can be used as inputs to the experiments.

For instance, to generate a permutation traffic matrix for a network of 16 nodes, with 2MB flows you can run:

python3 gen_permutation.py perm_16n_16c_2MB.cm 16 16 2000000 0 0

To generate the same permutation traffic matrix but with infinite flows run:

python3 gen_permutation.py perm_16n_16c.cm 16 16 0 0 0

Run the script without any parameters to see how to generate custom permutation cms.

After this, we have two traffic matrices: one with 2MB flows and one with infinite (0 sized) flows. Examine the files to understand their format.

Running NDP

To run an NDP experiment, a required argument is the strategy for packet-level multipathing, which can be perm (source routed, near-perfect), ecmp (virtual paths given with -paths X), ecmp_host (similar, but with actual switches doing hashes) or ecmp_ar (adaptive routing).

To mimic ECMP, we will be using ecmp_host. Example to run a permutation with ECMP, 16 paths, MTU 4K, an initial window of 50 packets and 50-packet switch queues:

./htsim_ndp -nodes 16 -tm connection_matrices/perm_16n_16c.cm -cwnd 50 -strat ecmp_host -paths 16 -log sink -q 50 -end 1000 -mtu 4000

To see the average flow throughputs, use:

../parse_output logout.dat -ndp -show

Exercise 1. Plot the flow throughput distributions when using 1,4,8,16 and 32 paths.

In our next exercise, we will run the same traffic matrix but with limited size flows of 2MB. We will be using the first TM file we generated, the one named _2MB.cm:

./htsim_ndp -nodes 16 -tm connection_matrices/perm_16n_16c_2MB.cm -cwnd 50 -strat ecmp_host -paths 16 -log sink -q 50 -end 1000 -mtu 4000

Grep "finished" in the output to find the flow completion times.

Exercise 2. Plot the distribution of flow completion times when using 1,4,8,16 and 32 paths.

Exercise 3. Run the same experiment for Swift as in Exercise 1 and compare the results to NDP with 1 path.

Exercise 4. Run a permutation using htsim_tcp. Study the parameters to understand how to obtain similar results to the ones above.